The Tip of Math Academy’s Technical Iceberg

Our AI expert system is one of those things that sounds intuitive enough at a high level, but if you start trying to implement it yourself, you quickly run into a mountain of complexity, numerous edge cases, lots of counterintuitive low-level phenomena that take a while to fully wrap your head around.

Want to get notified about new posts? Join the mailing list and follow on X/Twitter.

After posting about how our AI works and the high-level structure of our spaced repetition model, one of the many components in our AI expert system, we received the following question:

Aren’t you concerned with such great information you’re giving your secret sauce to the competition?

Here’s the answer to that question.

The technical details revealed in those posts are just the tip of the iceberg. Our AI expert system is one of those things that sounds intuitive enough at a high level, but if you start trying to implement it yourself, you quickly run into a mountain of complexity, numerous edge cases, lots of counterintuitive low-level phenomena that take a while to fully wrap your head around.

In some loose sense, it’s kind of like how Newtonian physics seems simple enough at a macro level but once you go down a few levels of scale you get into this weird WTF realm of quantum mechanics and where it’s a real struggle to tame the complexity. Actually, the analogy runs pretty deep – topics connected up in the knowledge graph are like atoms connected up by forces, and even within topics, the internal dynamics are so complicated that we literally refer to it as a “quantum state” in conversation.

To put this in perspective: the system is so large, so complex that I’ve been creating a large technical document for myself to keep all the math / theory details straight in my head. It’s at about 100 pages right now, but only halfway done, and it’s just the mathematical theory and algorithm implementation notes, nothing to do with the actual structure of the code.

That spaced repetition model structure that we posted is a simplification of a bunch of math that takes over 20 pages to describe in proper detail (and it’s very dense like a textbook or research paper).

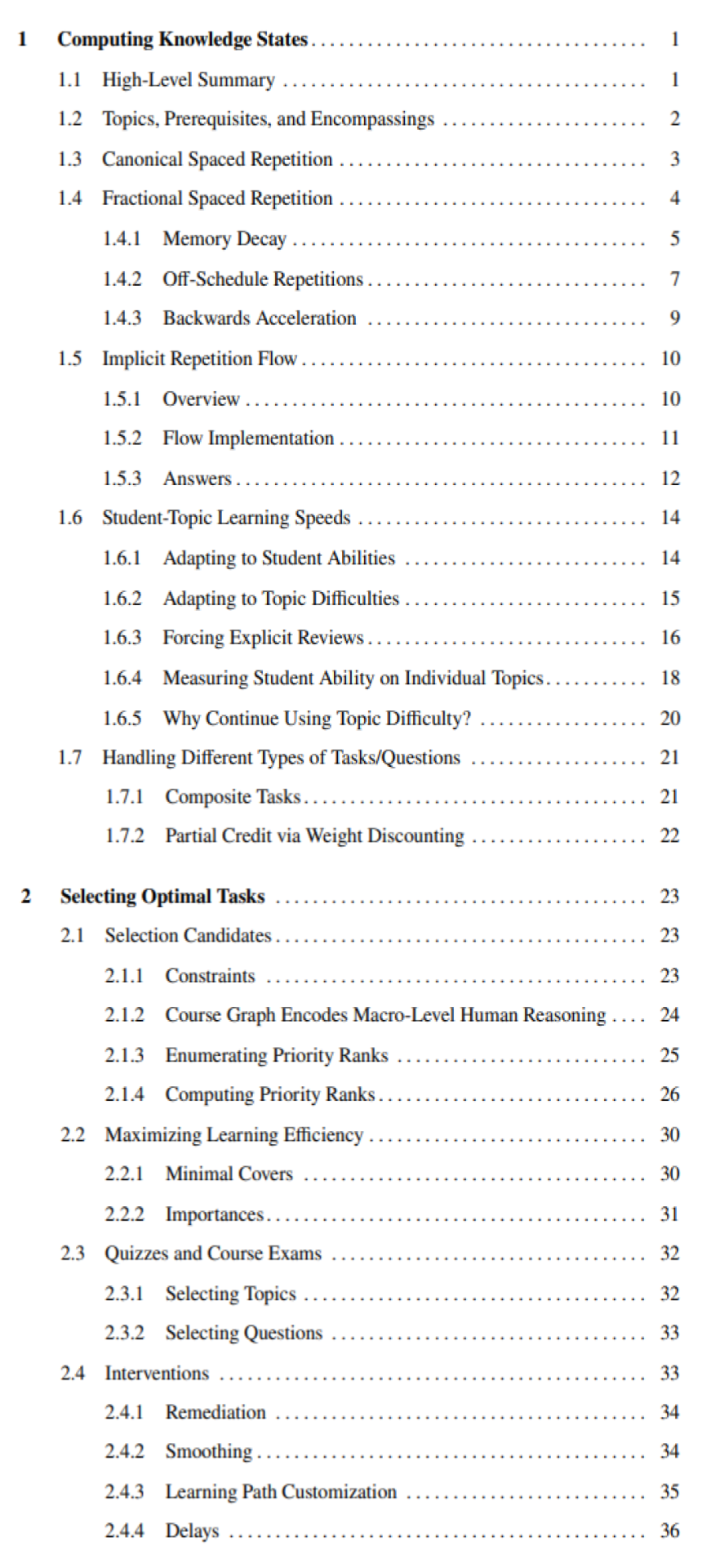

For reference, this is the table of contents for just the first two chapters.

Those two chapters don’t even touch on all the theory behind

- how diagnostics work,

- how XP is calibrated,

- how course completion dates are estimated,

- how we are able to run stuff quickly in seconds as opposed to minutes (there's lots of tricky caching stuff that has to happen),

- how we keep all this stuff working properly while making updates to the production knowledge graph,

- how the model validates and repairs itself in the event that defects are somehow introduced into the underlying knowledge graph data,

- how we validate the decisions made by the model in real-time and also over the long term to make sure it's behaving reasonably,

- and so on.

Also, in order for all this stuff to work properly, you need a mountain of content that is structured in proper knowledge graph form, broken down in proper granularity with tons of domain-expert knowledge encoded (not just prerequisites but also other types of relationships). So even if you managed to build a reasoning system like this, you couldn’t just slap it onto a bunch of loosely structured content and have it work properly (even Khan Academy’s content is nowhere near structured enough).

Want to get notified about new posts? Join the mailing list and follow on X/Twitter.