The Brain in One Sentence

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding to sparsify input, spike-timing dependent plasticity to learn inference, and layering to implement hierarchical predictive learning.

Want to get notified about new posts? Join the mailing list and follow on X/Twitter.

The brain is quite complex. Even individual cells of the brain are beyond our complete understanding. However, when we step back and take a bird’s-eye view of the brain, we can see a method to its madness.

This article will proceed as follows:

- We'll summarize the brain in a single sentence.

- The sentence will seem like gibberish.

- We'll use simple terms to build up our knowledge base so that we can understand more and more of the sentence.

- When we've rebuilt the complete sentence, you will see how aspects of the brain fit like puzzle pieces, and the sentence will make sense.

Ready? Here is the brain, in a single sentence:

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding to sparsify input, spike-timing dependent plasticity to learn inference, and layering to implement hierarchical predictive learning.

The above statement by no means encapsulates all intricacies of the brain, but it does bundle together many of the brain’s essential functional principles – at least, those that we currently know about. Let’s break it down.

The brain is a network.

A network is just a bunch of interconnected things. In social networks, people and social groups are connected through communication. In computer networks (such as the Internet!), computers can transmit data to one another.

A social network. (source)

The brain is a neuronal network.

Just as social networks are interconnected social groups and computer networks are interconnected computers, neuronal networks are interconnected neurons.

A neuron is a type of biological cell that connects to other neurons and communicates via fluctuations in its electric field. Although there are many intricacies to neurons, we will take a very simplified view: we will think of a neuron as “active,” or “on,” when its electric field deviates from its normal “inactive” or “off” state.

A neuron. (source)

A neuron’s state is mainly determined by the states of its neighbors, the other neurons that connect to it. If enough neighbors are on, the neuron will turn on. Otherwise, the neuron will stay off.

Although this may seem like a very simple scenario when we have a couple of neurons, try to imagine all the possible ways that just 100 neurons can be on or off! Depending on the way the neurons are connected, there can be up to $2^{100} \approx 10^{30}$ possible network states! Now try to imagine all the possible states of the brain, which many sources estimate has roughly 100 billion neurons. The number of neurons in the brain is greater than the number of people in the world, and the number of possible network states of those neurons is, for all practical purposes, infinite.
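To get a feel for these numbers, here is a quick arithmetic check in Python. It assumes the simplified picture above, where each neuron is either on or off; the function name `count_network_states` is just an illustrative choice.

```python
import math

def count_network_states(num_neurons: int) -> int:
    """Upper bound on the on/off patterns of `num_neurons` two-state neurons."""
    return 2 ** num_neurons

# 100 neurons: Python integers are unbounded, so this is exact.
states_100 = count_network_states(100)
print(states_100)
print(f"roughly 10^{math.log10(states_100):.1f}")

# 100 billion neurons: the number itself is far too large to print,
# so we only compute how many decimal digits it would have.
brain_neurons = 100_000_000_000
exponent = brain_neurons * math.log10(2)
print(f"roughly 10^({exponent:.3g})")
```

This is only an upper bound: as the text notes, the wiring between neurons can rule out many of these patterns.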

The brain is a neuronal network integrating specialized subsystems.

The brain is a system. It contains subsystems that gather and process information. Our senses – sight, touch, taste, hearing, etc. – are subsystems of the brain that gather information from our environment. Other subsystems keep track of what is going on inside of our body. Each subsystem is specialized for a particular function – for example, to enable sight, our eyes contain specialized receptor cells that keep track of the color and intensity of light that we encounter.

Our brain combines, or integrates, these specialized subsystems so that synergy can occur: by interacting and cooperating with each other, the specialized subsystems can process information more efficiently than they could by working separately. To experience your brain integrating specialized subsystems, imagine that you heard the sound “woof.” Although the spoken “woof” is not an image, you might visualize a dog. You might imagine petting a dog and feeling its fur, too. By linking your senses together, your brain can better represent the idea of a dog.

The brain is a neuronal network integrating specialized subsystems that use local competition.

Let’s start with the idea that a neuron might be active when you look at a coffee cup, but not when you look at a cheetah. (Such neural selectivity occurs, for example, in the primary visual cortex: some neurons turn on in response to bars, while other neurons turn on in response to edges.)

A cheetah. (source)

Representing object images with neurons is straightforward when neurons have drastically different selectivities. When we see a cheetah, our cheetah-selective neurons will clearly be active, and our coffee cup-selective neurons will clearly be inactive.

A coffee cup. (source)

However, at a glance, we might be unsure whether we saw a cheetah or a leopard, because cheetahs and leopards share many similar characteristics.

A leopard. (source)

In other words, when neurons have similar selectivities, it is more difficult to decide which neuron should become active.

Local competition solves this issue. Neurons that represent similar features tend to be located spatially close to one another, and when neurons become active, they often activate a surrounding pool of inhibitory neurons. When these inhibitory neurons are active, they try to inactivate nearby neurons. The end result is a game of king-of-the-hill where neurons that represent similar features will fight to represent that feature, and only the most-active (i.e. best-feature-matching) neurons will stay active. This is why, for example, once you’ve recognized the woman in the optical illusion below as being either young or old, you might have trouble seeing the other perspective.
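The king-of-the-hill game can be sketched in a few lines of Python. This is a toy model, not a description of real circuitry: it folds the inhibitory neurons into a direct subtraction, and the function name `local_competition` and the parameters `radius`, `inhibition`, and `steps` are assumptions made for illustration.

```python
def local_competition(activities, radius=2, inhibition=0.3, steps=10):
    """Repeatedly let each neuron be suppressed by its active neighbors.

    activities: starting activity of each neuron, ordered by position.
    radius:     how far away a neighbor can be and still inhibit.
    inhibition: suppression per unit of neighbor activity.
    """
    current = list(activities)
    for _ in range(steps):
        updated = []
        for i, own in enumerate(current):
            lo = max(0, i - radius)
            hi = min(len(current), i + radius + 1)
            # Total activity of nearby neurons, excluding the neuron itself.
            neighbor_sum = sum(current[lo:hi]) - own
            # Activity cannot drop below zero.
            updated.append(max(0.0, own - inhibition * neighbor_sum))
        current = updated
    return current

# A "leopard" neuron (0.9) and a "cheetah" neuron (1.0) respond almost
# equally at first; a third neuron (0.2) barely responds.
result = local_competition([0.9, 1.0, 0.2])
print(result)  # only the middle ("cheetah") neuron is still active
```

Even though the two leading neurons start out nearly tied, the slightly stronger one ends up silencing the other.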

Young woman or old woman? (source)

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding.

Thresholding is a way of putting just-barely-active neurons out of their misery. If you try to play king-of-the-hill with a smart-aleck, the smart-aleck will put one foot on the base of the hill and claim that they have not lost because they are still technically on the hill (even though it is obvious that you have won). Similarly, it would be quite inefficient if losing neurons remained slightly active once an equilibrium had been reached. Not only would their activity waste energy, but it would also prevent the winning neurons from being as active as they could otherwise be. We already know the winning neurons, so why should the losers remain slightly active?

The solution to king-of-the-hill with a smart-aleck is to play the game on a plateau rather than a hill – either your feet are on the plateau, or they’re on the ground. There is no in-between.

Thresholding: either you're on, or you're not. (source)

Neurons use an analogous solution: thresholding. A neuron does not turn on until it receives input that passes a particular level, called a threshold. Once the input is above the threshold, the neuron begins to turn on. This way, losing neurons that receive a slight amount of input remain completely off, since their slight amount of input is below the threshold.
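Here is a minimal sketch of thresholding in Python. It assumes one particular reading of "begins to turn on": a neuron's output is however far its input exceeds the threshold, and zero otherwise. The function name `apply_threshold` and the numbers are illustrative.

```python
def apply_threshold(inputs, threshold):
    """Silence every neuron whose input does not exceed `threshold`.

    Neurons above the threshold respond in proportion to how far above
    it they are; everyone else is completely off.
    """
    return [x - threshold if x > threshold else 0.0 for x in inputs]

# One clear winner (0.75) and one smart-aleck with a foot on the hill (0.125).
outputs = apply_threshold([0.125, 0.75, 0.0], threshold=0.25)
print(outputs)  # [0.0, 0.5, 0.0]
```

The slightly active loser is switched off entirely, while the winner stays on.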

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding to sparsify input.

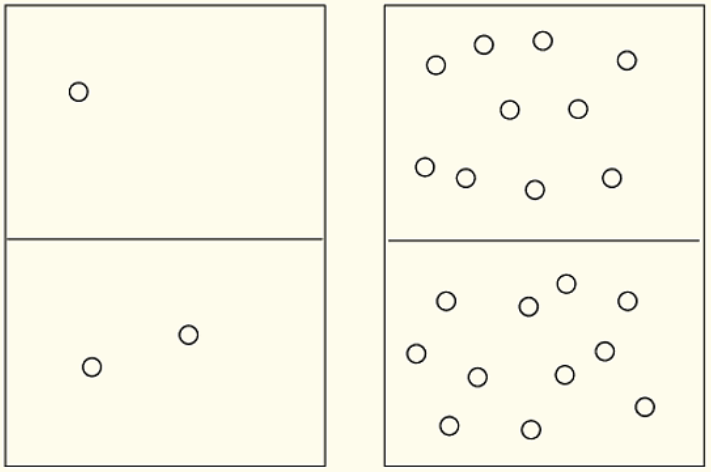

When your cupcake has very few sprinkles, you might say that the sprinkles are sparse, the opposite of dense. Although sparse sprinkles might sadden you, sparse neuronal representations should make you happy – they are much easier to process than dense representations, largely because they are much easier to classify. For example, look at each of the boxes in the following image and try to decide whether the top or the bottom has more circles.

Sparse (left) vs. non-sparse (right).

As seen easily in the left sparse box and not-so-easily in the right non-sparse box, the bottom of each box has more circles.

Sparse neural representations consist of strong activation of a relatively small set of neurons. They facilitate information processing and classification in our brain, and the whole purpose of neuronal king-of-the-hill on a plateau is to generate sparse representations.

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding to sparsify input, and learn inference.

Easy-to-work-with, easily-classifiable information is extremely advantageous to the brain. However, if all our brains could do was classify, we all would die pretty quickly. Using only classification, you could recognize that the object approaching you at a speed of 50 miles per hour is a Ferrari, but you could not predict what will happen if you don’t get out of its way. Without a neuronal prediction mechanism, we’d all eat pavement.

Learning inference is essential to survival. We need to be able to predict, or infer, what will happen in various situations, so that we may act in the best interest of our survival. Whenever something happens, we need to be able to infer what will happen next.

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding to sparsify input, and spike-timing dependent plasticity to learn inference.

To learn inference, our brains change in response to newly-acquired information. For this reason, our brains are said to be “plastic” – they can be molded by experiences.

Our brain learns inference by modifying connections between neurons according to spike-timing dependent plasticity. If neuron A turns on and neuron B turns on right afterward, then event A may have caused event B, but event B could not have caused event A. Consequently, the A $\rightarrow$ B connection is strengthened, while the B $\rightarrow$ A connection is weakened.
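A common textbook way to write this rule down is pair-based STDP with an exponential time window: the closer together the two spikes, the larger the change. The sketch below uses that form; the function name `stdp_delta` and the values of `a_plus`, `a_minus`, and `tau` are illustrative assumptions, not measured quantities.

```python
import math

def stdp_delta(t_pre, t_post, a_plus=0.1, a_minus=0.12, tau=20.0):
    """Change in connection strength for one pair of spikes (times in ms).

    t_pre:  spike time of the neuron sending the connection.
    t_post: spike time of the neuron receiving it.
    """
    dt = t_post - t_pre
    if dt > 0:   # sender fired first: it may have caused the receiver to fire
        return a_plus * math.exp(-dt / tau)
    if dt < 0:   # sender fired second: it cannot have caused the receiver to fire
        return -a_minus * math.exp(dt / tau)
    return 0.0

# Neuron A spikes at 10 ms, neuron B right afterward at 15 ms.
t_a, t_b = 10.0, 15.0
w_ab = 0.5 + stdp_delta(t_pre=t_a, t_post=t_b)  # A -> B connection
w_ba = 0.5 + stdp_delta(t_pre=t_b, t_post=t_a)  # B -> A connection
print(w_ab, w_ba)
```

Starting from the same strength of 0.5, the A $\rightarrow$ B connection ends up above 0.5 and the B $\rightarrow$ A connection ends up below it.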

This is similar to inference in real life: if you flip a switch and a light turns on afterward, you would infer that flipping the switch causes the light to turn on, but you would not infer that unscrewing the light does anything to the switch.

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding to sparsify input, spike-timing dependent plasticity to learn inference, and layering to implement hierarchical learning.

The brain includes layers of neurons that are structured like a human pyramid. In a human pyramid, the first (bottom) layer of humans provides a base for the second layer, the second layer provides a base for the third layer, and so on. That is, each layer of humans depends on its contact with humans in the layer below (aside from the first layer, which depends on its contact with the ground).

A human pyramid. (source)

Similarly, in the brain, each layer of neurons receives inputs from neurons in the layer below (aside from the first layer, which uses the senses to gather information about the environment). In order for a neuron to turn on, it must receive enough input from neurons in the previous layer.

This setup implements a hierarchy of abstraction: active neurons in the first layer summarize information that the senses have gathered, active neurons in the second layer summarize information that is encoded in the first layer, active neurons in the third layer summarize information that is encoded in the second layer, and so on.

As we move up the hierarchy, neurons gain greater “meaning”:

- If a bottom-level neuron is active, it means that the senses have recorded a particular stimulus pattern.

- If a second-level neuron is active, it means that there is a particular pattern in the bottom level, so there is a particular pattern of stimulus patterns.

- If a third-level neuron is active, it means that there is a particular pattern in the second level, so there is a particular pattern of patterns of stimulus patterns, and so on.

Thus, higher levels in the hierarchy can represent more complex concepts.
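The pyramid of patterns can be sketched as a stack of simple on/off layers. Everything here is a toy assumption: the wiring lists, the threshold of 2, and the function name `layer_output` are made up for illustration.

```python
def layer_output(inputs, connections, threshold):
    """Compute one layer of on/off neurons from the layer below.

    inputs:      list of 0/1 states in the layer below.
    connections: for each neuron in this layer, the indices of the
                 lower-layer neurons that feed it.
    threshold:   how many of those inputs must be on for it to turn on.
    """
    return [
        1 if sum(inputs[i] for i in feeders) >= threshold else 0
        for feeders in connections
    ]

# Bottom level: six stimulus patterns recorded by the senses.
level1 = [1, 1, 1, 1, 0, 0]

# Second level: each neuron looks for a pattern of stimulus patterns.
level2 = layer_output(level1, [[0, 1], [2, 3], [4, 5]], threshold=2)

# Third level: each neuron looks for a pattern of those patterns.
level3 = layer_output(level2, [[0, 1], [1, 2]], threshold=2)

print(level2)  # [1, 1, 0]
print(level3)  # [1, 0]
```

The single active third-level neuron summarizes four active bottom-level neurons, which is the sense in which higher levels carry more "meaning."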

The brain is a neuronal network integrating specialized subsystems that use local competition and thresholding to sparsify input, spike-timing dependent plasticity to learn inference, and layering to implement hierarchical predictive learning.

Each layer sends input to the layer above, sending information up the hierarchy. However, feedback connections also exist: neurons in higher layers sometimes send input to neurons in lower layers. Feedback connections allow information to travel down the hierarchy, and information flow down the hierarchy results in predictions.

To see feedback predictions at work, recall the first time you saw Lord Voldemort (if you’re not familiar with the Harry Potter series, take a look at the bald person in the picture below).

Voldemort is on the right. (source: droo216)

Because sufficiently many facial features (e.g. eyes and a mouth) were present on Voldemort’s head, your face-selective neurons received enough input to become active, and you recognized Voldemort as having a face.

However, you may have felt that something just wasn’t normal about Voldemort’s face. This is because you predicted something that doesn’t exist.

Whenever you see facial features, your face-selective neurons turn on. You soon see other facial features, so the connections from your face-selective neurons to the other facial-feature-selective (e.g. ear-selective and nose-selective) neurons are strengthened due to spike-timing dependent plasticity. Thus, your face-selective neurons also send input to neurons that are selective to facial features.

After your eye-selective and mouth-selective neurons turned on and activated your face-selective neuron, your face-selective neuron sent input back to them as well as to other facial-feature-selective neurons such as ear-selective and nose-selective neurons. In other words, seeing eyes and a mouth led you to predict a face, and predicting a face led you to predict ears and a nose. Indeed, Lord Voldemort has ears… but he has no nose! Voldemort’s face doesn’t look normal because you predicted a nose, and he has no nose!
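The Voldemort example can be written as a tiny feedforward-then-feedback loop. This is a deliberately cartoonish sketch: the feature list, the threshold of 2, and the function name `predict_from_face` are assumptions made for illustration.

```python
FACE_FEATURES = ("eyes", "mouth", "ears", "nose")

def predict_from_face(observed, features=FACE_FEATURES, threshold=2):
    """Return (predicted features, predictions that were not observed).

    Feedforward: the face neuron turns on if enough features are seen.
    Feedback:    an active face neuron predicts every face feature.
    """
    seen = set(observed)
    face_active = sum(1 for f in features if f in seen) >= threshold
    if not face_active:
        return set(), set()
    predicted = set(features)
    unmet = predicted - seen  # predicted but never observed
    return predicted, unmet

# Voldemort: eyes, a mouth, and ears, but no nose.
predicted, unmet = predict_from_face({"eyes", "mouth", "ears"})
print(unmet)  # {'nose'}
```

The leftover prediction, a nose that never shows up, is the "something just isn't normal" feeling.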

Congratulations! You now have the ability to describe the basics of the brain in a single sentence!

However, before you have too much fun with your new superpower, you should know about some things that were left out of this discussion. Although we ignored these topics for the sake of simplicity and big-picture-ness, they are also important for a holistic view of the brain.

- Action potentials are stereotyped fluctuations in neuronal electric fields. When a neuron is on, it is really only on for a brief moment, during which the action potential, or spike, takes place. Then, the neuron enters a refractory period, a brief time interval during which it is unable to spike.

- Although a neuron can be thought of as "on" or "off" according to its spiking rate, important temporal aspects of neuronal activity, such as synchrony, are coming to light. It is thought that synchronous spiking represents binding of features as part of a single object.

- Spike-timing dependent plasticity is a simplified type of learning rule -- there are actually many different kinds of neural plasticity. It seems that many forms of plasticity arise from a calcium learning model, which is currently being investigated. We did not cover the calcium learning model because it is not yet well-understood and it is not as simple to explain.

- There are also many different types of neurons. Throughout our discussion, we assumed that neurons excited each other (although we did briefly encounter inhibitory neurons). Though neurons do fall into the general classes of "excitatory" and "inhibitory," there exist different types of neurons that are specialized for particular functions.

- In order to use its classification, prediction, and deep learning abilities, the brain must control a body. The embodied brain controls the movement of the body through space in a way that optimizes its survival and allows it to gain needed information. The spinal cord plays a large role in the operation of the embodied brain.
