Cutting Through the Hype of AI

Media outlets often make the mistake of anthropomorphizing computer programs, that is, attributing human-like characteristics to them.

This post is part of the series A Primer on Artificial Intelligence.

Want to get notified about new posts? Join the mailing list and follow on X/Twitter.

Media outlets continue to write stories about AI (and today especially, deep neural networks) that are grotesquely sensationalized, often to the point of anthropomorphizing computer programs, that is, attributing human-like characteristics to them.

For example, consider the headline “Google Supercomputer Gives Birth To Its Own AI Child.” (This is a real headline; if you Google it, you’ll find plenty of articles with similar headlines.)

The way the headline is phrased would lead you to think that Google researchers built a futuristic computer, and that this computer somehow gave birth to a human-like child composed of circuits rather than flesh and blood.

Source: The Daily Star

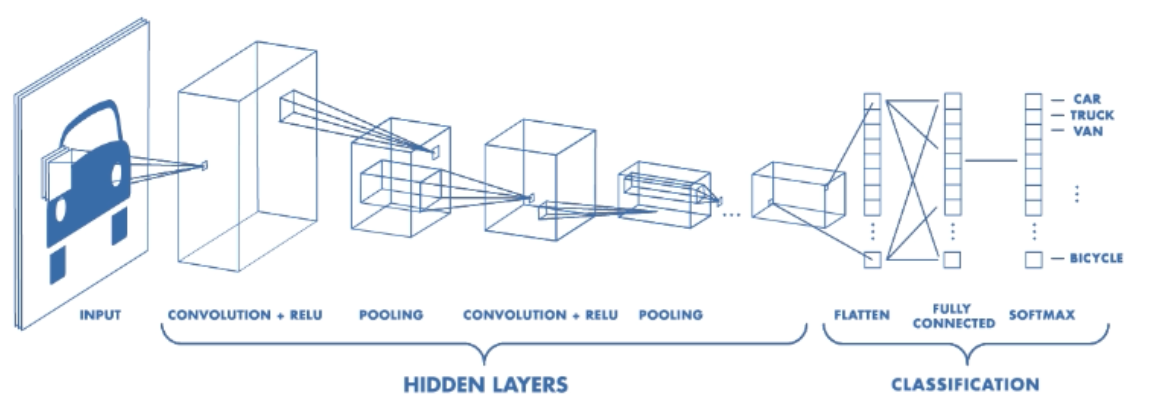

But this is simply not true. Rather, Google researchers built a model to predict a good architecture, or connectivity arrangement, for another model that was meant to perform a specific task. Usually, the researchers building a model choose its architecture themselves, but in this case they built a second model to predict which architecture would lead to good performance on that task.

Diagram of a sample model architecture for a convolutional neural network.

By loose analogy, then, the model being trained to perform the specific task is like a “child,” and the model predicting a good architecture for the child model is like a “parent.” But did this parent model give birth to the child model in a human-like way? Does the child model resemble a human-like child? The answer to both of these questions is a resounding no.
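To make the parent/child relationship concrete, here is a toy sketch of one program choosing an architecture for another. Everything in it is an assumption made for illustration: the search space (`DEPTHS`, `WIDTHS`), the made-up scoring formula in `evaluate_child`, and the use of plain random search in `parent_search` in place of the learned model the researchers actually built. It is not their method, only the general shape of the idea.

```python
import random

# Hypothetical search space: each "architecture" is just a choice of
# depth and width for an imaginary child network.
DEPTHS = [1, 2, 3, 4]
WIDTHS = [8, 16, 32, 64]


def evaluate_child(depth, width):
    """Stand-in for training a child model and measuring its accuracy.

    This made-up formula rewards a moderate size and penalizes very small
    or very large models; a real system would train and validate an
    actual network here.
    """
    capacity = depth * width
    return 1.0 - abs(capacity - 64) / 256


def parent_search(num_trials, seed=0):
    """The "parent": propose architectures and keep the best-scoring one.

    Plain random search is used as the simplest possible proposer. This
    is an assumption for illustration; the post describes a learned model
    in this role.
    """
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = (rng.choice(DEPTHS), rng.choice(WIDTHS))
        score = evaluate_child(*arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    arch, score = parent_search(num_trials=20)
    print(f"best child architecture (depth, width): {arch}, score: {score:.2f}")
```

Nothing here is born in any sense. The “parent” is a loop that tries options and remembers the best one, and the “child” is a pair of numbers describing a network's shape.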

If someone didn’t realize that the headline “Google Supercomputer Gives Birth To Its Own AI Child” was just a loose analogy, then they might begin to develop ridiculous opinions about AI based on the false assumption that computers can literally give birth to AI children resembling human children. For example, an uninformed pessimistic reader might argue that we need to stop AI research because a growing population of AI children will pose a threat to the human population. Or an uninformed optimistic reader might argue that we must rush to pass laws supporting the equality of AI children, lest they be discriminated against. Both arguments are irrelevant to the present state of AI.

The takeaway of this discussion is that when you read about AI in the news, you should be skeptical of titles that appear sensationalized or anthropomorphized. If some statement about the field of AI is too good (or too bad) to be true, then it probably isn’t true in a literal sense. This will become increasingly apparent as you learn more about the technical underpinnings of AI.
