Bottom-Up Versus Top-Down Arguments in Machine Learning Education

Want to get notified about new posts? Join the mailing list and follow on X/Twitter.

“Bottom-up vs top-down” arguments in ML education never end well because there is little agreement on where the bar is for what it means to learn ML.

On one extreme, some people drop the bar really low, sometimes all the way down to “you know ML if you can use an off-the-shelf library to complete a run-of-the-mill project, you don’t need to know what’s going on under the hood, you don’t need to be able to read/implement research papers, you don’t need to be able to customize for novel use-cases that pop up when you’re trying to innovate.”

(Nowadays some people will even claim they do ML/AI if all they’re doing is chatting with an LLM, and they’ll tell you that not only do you not need to know much math, but you don’t need to know much coding either – anyone can learn ML/AI in a day, you just throw a coherent prompt into an API call.)

On the other hand, some people raise the bar so high that you only meet it if you’re publishing mathematical theorems about ML theory. You ask them how much math you need for ML, they’ll point you at a textbook like [Abstract] Algebra, Topology, Differential Calculus, and Optimization Theory For Computer Science and Machine Learning that weighs in at nearly 2,200 pages peppered with stuff like Zorn’s Lemma, Group Theory, Quaternions, … none of which are needed to read/implement most research papers and customize for novel use-cases that pop up when you’re trying to innovate.

Here’s what I consider to be the most pragmatic, actionable, and efficient approach to learning serious ML:

Plan your broad-strokes journey top-down, but carry out the granular steps bottom-up.

The top-down approach can be useful for planning a broad-strokes learning journey towards a goal. For instance, if you want to learn ML, then you can think top-down to figure out what fields of math you need to learn in order for machine learning to become accessible to you. You’ll find that you absolutely need to learn calculus, linear algebra, and prob/stats, and you can skip stuff like abstract algebra, number theory, etc.

However, the granular steps of the journey, the actual learning, needs to be carried out bottom-up.

For instance, are you really going master computing neural net weight gradients via backpropagation by asking “what does that squiggly ‘d’ mean,” “why do you have to chain-multiply the derivatives like that,” “how do you calculate the derivative of any activation function,” etc., all the way down to the depths of whatever is the last piece of math you’ve mastered?

No, all you’re going to do with those questions is create a roadmap of what you need to learn. Which is essentially a calculus course. Except your roadmap will be terrible because you don’t actually know the subject yourself – it will have all sorts of gaps that you don’t even realize are missing because, which is to be expected given that you don’t actually know the subject.

You’ll try to climb back up the skill tree implied by your incomplete roadmap and you’ll repeatedly get stuck trying to climb up to the next branch that you can’t reach because there are prerequisites that you don’t realize you’re missing.

Most people in this situation will eventually just give up due to all the friction. Only those who have extremely outsized perseverance and generalization ability have any chance of fighting through and making it to the other side. And even then, it will take longer (and they’ll likely end up with more holes in their knowledge) than if they just sucked it up and worked through a well-sequenced calculus course.

Anyway, I’ll probably circle back and write more about this once our “serious ML” course sequence is done (not just the first course, but the full course sequence all the way up through transformers, diffusion models, DDPGs, etc.) – which should make it clear where the bar is (i.e., what constitutes “serious ML”), how much math you need to clear the bar, why you need that math (i.e., what exact ML stuff is it a prerequisite of), and how vast a chasm there is between the level of math that most aspiring “serious ML” learners know and level they really do need to get to, to achieve their “serious ML” aspirations.

By the way, our approach comes from teaching this stuff manually to high schoolers for several years with great success:

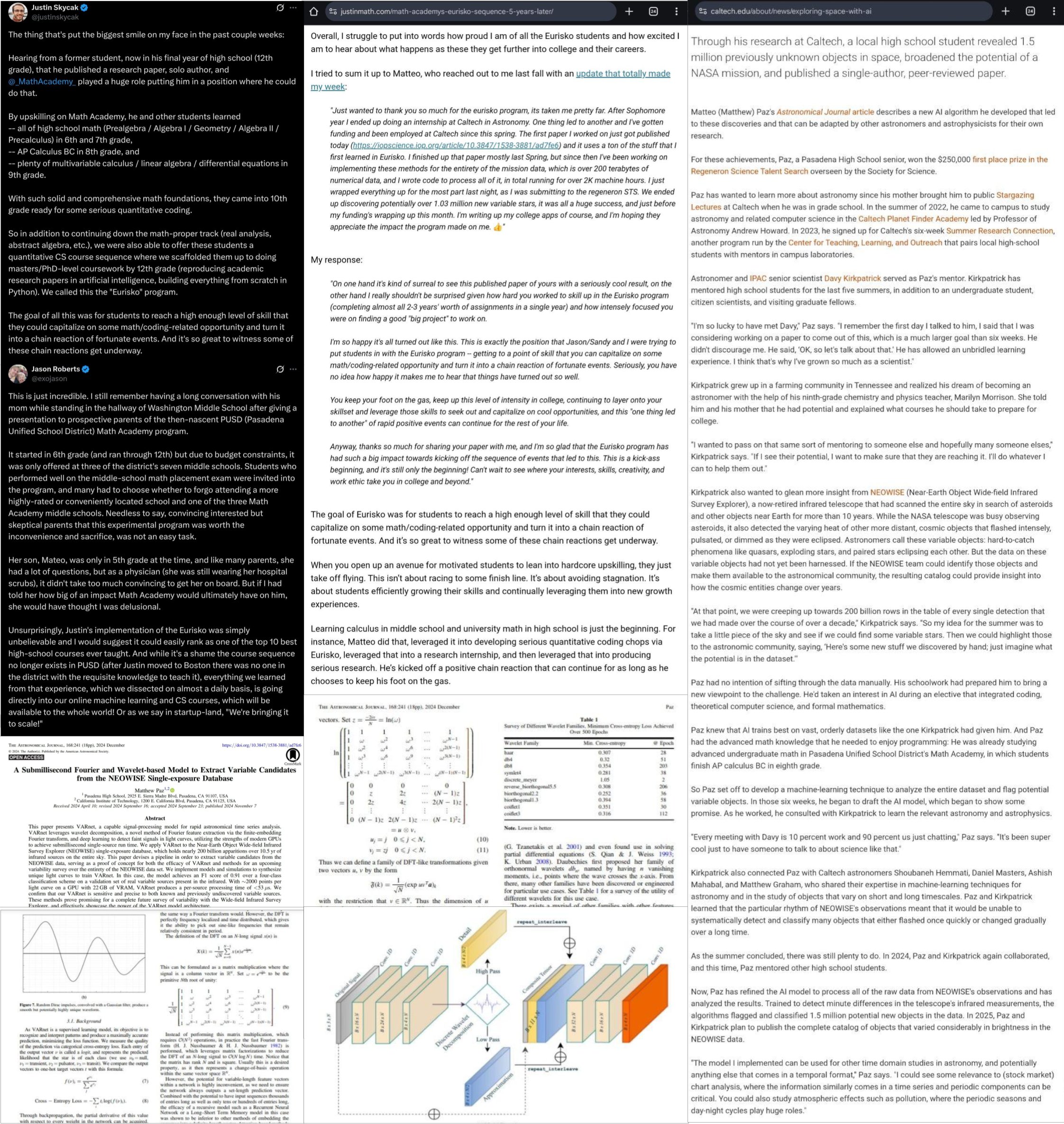

E.g., one of them won 1st place ($250,000) in last year’s Regeneron Science Talent Search for developing a model that “revealed 1.5 million previously unknown objects in space, broadened the potential of a NASA mission” (a direct quote from Caltech’s website) and published his results solo-author in The Astronomical Journal. (2025-12-27 update: he also got personally recruited by the head of NASA, with a fighter jet ride as a signing bonus.) More info in the image below:

Lastly, I want to be clear that I have nothing against ML projects. Hands-on, guided projects that go beyond textbook exercises are important, we will be including plenty of projects in our own ML courses, and I even recommended some project-heavy learning resources as part of a ML roadmap that I put together last year. There is a clear benefit to doing projects and I’m not trying to steer anyone away.

What I am saying, though, is that in my experience as well as my read of the science of learning literature: Projects are great for pulling a bunch of knowledge together and pushing it further, but when a student is learning something for the very first time, it’s more efficient to do so in a lower-complexity, higher-scaffolding setting. Otherwise, without a high level of background knowledge, it’s easy to get overwhelmed by a project – and when a student is overwhelmed and spinning their wheels being confused without making much progress, that’s very inefficient for learning.

Follow-Ups Questions

Q: I dropped out of HS, self taught programming and now a soft eng. Very unimpressive background. Wanted to learn ML so I started with FastAI courses. However, it quickly made me realize that beyond doing simple stuffs solving simple problems, if I wanted to go deeper and truly understand the “basic” of how models actually work under the hood, I need some mathematical foundation. Ofc being HS drop out, I had 0 math foundation so I had to start from ground up. I started with literally fcking Algebra lol… but then pre-calc, calc 1/2/3, then linear algebra, then probability and statistics, and now studying optimization and finishing up the Stanford course ISL with python. Still a loooooong way to go before I am any useful in ML, but grateful for journey. My conclusion in this argument is that you really need BOTH. The order of which you do it depends on what you suck at most. The end.

A: Thanks for sharing your story! Yeah, sounds kind of like stage 1 vs stage 2 of Bloom’s talent development process. In stage 1 you focus on building awareness/interest/motivation while exploring the talent domain, in stage 2 you commit seriously to performance improvement as the primary goal.

Want to get notified about new posts? Join the mailing list and follow on X/Twitter.